More Than Carbon: The Full Environmental Footprint of LLMs

Sophia Falk

Discussions of AI's environmental impact typically focus on one metric: carbon emissions resulting from AI’s increasing energy consumption. Headlines regularly warn about the massive energy demand of training large AI models, and the International Energy Agency projects that electricity demand from data centers worldwide will more than double by 2030, reaching levels comparable to Japan's entire current consumption. While this energy consumption is a reason for concern, the resulting ‘carbon tunnel vision’ misses a much larger and more complex environmental story.

The environmental footprint of AI: Beyond the carbon tunnel vision

Training large-scale AI models with billions of parameters requires immense computational power to process vast amounts of data. This is where Graphics Processing Units (GPUs) become essential. Originally designed for video games, GPUs have a fundamentally different architecture from central processing units (CPUs). While CPUs have a few powerful cores for sequential tasks, GPUs contain many smaller cores designed for parallel processing. This makes GPUs ideal for AI training, which involves millions of simultaneous matrix calculations. The Nvidia A100, the workhorse behind major AI models such as GPT-4, revolutionized AI training when it was released in 2020, facilitating the training of much larger and more complex models than ever before.

However, current environmental assessments focus primarily on measuring the energy consumption of these GPUs during AI training without considering the embodied impacts of the hardware itself. This is a critical omission: before GPUs can be plugged into a data center, resources must be extracted, processed, and assembled into their respective hardware components, and each of these steps carries significant environmental costs. Eventually, after a relatively short operational lifespan of only 1-3 years (Ostrouchov et al., 2020; Grattafiori et al., 2024; Karydopoulos & Pavlidis, 2025), these GPUs become electronic waste. Every one of these life cycle stages results in environmental impacts that carbon accounting alone fails to capture.

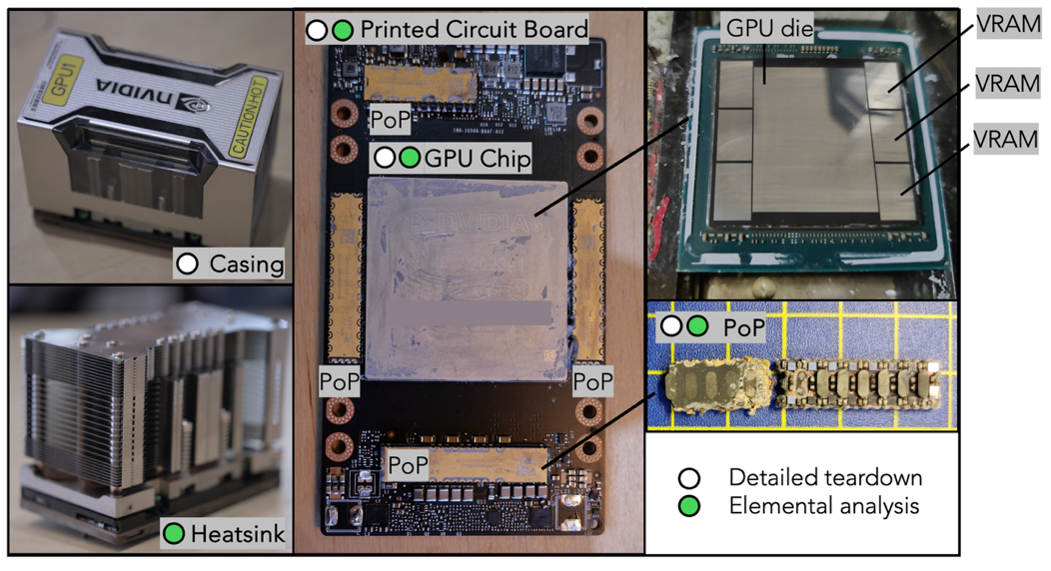

Figure 1. We first disassembled the Nvidia A100 SXM 40 GB GPU into three major component groups: the casing, the heatsink, and the printed circuit board (PCB). We then further analyzed the internal architecture of the largest integrated circuits: the GPU chip with its memory (VRAM), the electric current regulators, and the Power-on-Packages (PoPs). Image taken from Falk et al. (2025) with permission.

The data gap problem

Most environmental assessments so far could not include impact categories beyond carbon emissions because of limited data availability. The few studies that included additional life cycle stages and impact categories had to rely on secondary and proxy data – using existing life cycle assessment (LCA) results of ‘similar enough’ consumer electronics or generic estimates from available LCA databases.

Faced with this blind spot, we chose a different path. Instead of relying on proxies, we gathered the data ourselves. We acquired an Nvidia A100 GPU and took it apart. After disassembling it, we measured every component, ground up materials, burned samples, and used emission spectrometry to determine the exact elemental composition of the individual parts (see Fig. 1). With this newly gained information, we conducted a comprehensive cradle-to-grave environmental assessment of AI training across 16 different environmental impact categories (see Fig. 2), ranging from human toxicity to resource depletion to ecosystem damage.

Figure 2. System boundaries in life cycle assessments. The cradle-to-gate scope covers resource extraction, processing, upstream distribution, and the manufacturing of the GPU, while the cradle-to-grave assessment extends further to include the downstream distribution, the use phase (here the operational phase of the GPU in a data center setting, where AI models are trained or run for inference), and the End-of-Life (EoL) management of the GPU.

Results

Following the standardized LCA methodology, we evaluated the environmental impacts of training GPT-4 from cradle-to-grave across 16 environmental impact categories. The cradle-to-gate phase (see Fig. 2) dominates 6 out of 16 environmental impact categories, including freshwater eutrophication, human toxicity (both cancer and non-cancer), and mineral depletion.

A closer look at the embodied emissions of the hardware shows that two components stand out: the heatsink, which is the largest component, and the GPU chip, which is the second smallest. The copper heatsink accounts for approximately 98% of the GPU’s total weight. Copper is a heavy metal, and its extraction and processing release toxic by-products that contribute to both ecosystem damage and human toxicity. For example, large-scale copper excavation results in land use impacts, and acid mine drainage contributes to surface water contamination.

Paradoxically, the GPU chip – one of the smallest components by weight – dominates 10 out of 16 environmental impact categories. This reflects the extraordinarily energy- and resource-intensive semiconductor fabrication process. The A100's advanced 7 nm process technology amplifies these manufacturing impacts, illustrating a fundamental challenge in chip design: the smaller the structures on the chip, the higher the energy and resource consumption required for production.

As recent research has already revealed, the energy consumption of AI training is a reason for concern. More precisely, the full life cycle emissions of training GPT-4 amount to about 9700 tons of CO₂ equivalent (Falk et al., 2025). Yet critics argue that adding the embodied emissions to the use-phase carbon emissions is unnecessary, given the clear dominance of the data center’s energy consumption during GPT-4’s extensive training run of around 57 million GPU hours.

However, this misses a crucial point. The embodied emissions (raw material acquisition and processing, manufacturing of hardware, transportation) might appear insignificant relative to the total CO₂ footprint, but they are still substantial in absolute terms: 310 metric tons of CO₂. To give a sense of proportion for the embodied emissions of the Nvidia A100 GPUs used for the model training, consider this comparison: the average person would need 56 years to generate equivalent emissions.
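The proportions discussed here can be checked with a few lines of arithmetic. The following is a minimal sketch in Python that uses only the three figures quoted in the text (about 9700 tons in total, 310 tons embodied, 56 person-years). The variable names are illustrative, the per-person rate is merely what those figures imply rather than a value reported in the study, and treating the CO₂ and CO₂-equivalent figures as directly comparable is a simplifying assumption.

```python
# Figures quoted in the article (Falk et al., 2025).
TOTAL_LIFE_CYCLE_T = 9700.0   # full life cycle emissions of training GPT-4, tons CO2e
EMBODIED_T = 310.0            # embodied emissions of the A100 GPUs, tons CO2
PERSON_YEARS = 56.0           # years an average person needs to emit the same amount

# Share of the full life cycle footprint that is embodied in the hardware.
# Assumption: the CO2 and CO2e figures are treated as directly comparable.
embodied_share = EMBODIED_T / TOTAL_LIFE_CYCLE_T

# Per-person annual emissions implied by the "56 years" comparison.
# This is back-calculated from the article's numbers, not a reported value.
implied_tons_per_person_year = EMBODIED_T / PERSON_YEARS

print(f"Embodied share of total: {embodied_share:.1%}")
print(f"Implied emissions per person and year: {implied_tons_per_person_year:.1f} t")
```

Under these assumptions the embodied share comes out at roughly 3% of the total, which is the small relative number the critics point to, while the absolute amount still corresponds to decades of one person's emissions.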

Finally, the distribution and End-of-Life (EoL) stages (see Fig. 2) add little to the total impact across all categories in our assessment. However, this also needs to be put into perspective. First, the impacts of distribution and EoL are spread over every hour the GPU is used, so the fewer hours a GPU is in use, the larger their share per hour of use becomes. Second, our model doesn’t include illegal e-waste flows at the EoL, which would further increase EoL impacts if they could be properly quantified.
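The first point, that one-off impacts shrink per hour of use as a GPU is used more, can be illustrated with a small sketch. The function name, the `utilization` parameter, and the fixed impact value of 100 units are all hypothetical and chosen only for illustration; the 1 to 3 year range is the operational lifespan cited earlier in the article. This is not the allocation procedure of the study, just the basic idea of spreading a fixed impact over hours of use.

```python
HOURS_PER_YEAR = 24 * 365


def fixed_impact_per_hour(fixed_impact: float,
                          years_in_service: float,
                          utilization: float = 1.0) -> float:
    """Spread a one-off impact (e.g. distribution plus EoL) over hours of use.

    fixed_impact: impact in any unit (hypothetical value in this sketch).
    years_in_service: operational lifespan of the GPU in years.
    utilization: fraction of that time the GPU is actually in use (0 < u <= 1).
    """
    if years_in_service <= 0:
        raise ValueError("years_in_service must be positive")
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    hours_used = years_in_service * HOURS_PER_YEAR * utilization
    return fixed_impact / hours_used


# Hypothetical fixed impact of 100 units, for lifespans of 1, 2 and 3 years.
for years in (1, 2, 3):
    per_hour = fixed_impact_per_hour(100.0, years)
    print(f"{years} year(s) in service: {per_hour:.5f} units per hour")
```

Halving either the lifespan or the utilization doubles the per-hour share, which is why shorter or less intensive use makes these otherwise small stages weigh more heavily.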

To better understand how significant the overall environmental impacts are in a real-world scenario, we used the Planetary Boundaries framework, which defines the ‘safe operating space’ for humanity within Earth's natural limits. Simply put, we calculated what the accumulated training cost of GPT-4 means in terms of each person's fair share of our planet's annual environmental budget. Here are just three examples:

Climate impact: training GPT-4 is equivalent to the climate change budget of 11,522 people for an entire year

Fossil resource use: equivalent to the annual budget of 3,943 people

Freshwater ecotoxicity: equivalent to the annual budget of 1,421 people
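The conversion behind these three examples is a simple division of a total impact by a per-person annual budget. The sketch below shows that idea in Python. The function name is hypothetical, and the per-person climate budget is back-calculated from two numbers in this article (about 9700 tons of CO₂ equivalent and 11,522 people) purely for illustration; it is not a budget value taken from the study, and the rounding of the published figures means it should be read as approximate.

```python
def people_equivalents(total_impact: float,
                       annual_budget_per_person: float) -> float:
    """Number of annual per-person budgets that a total impact uses up.

    Both arguments must be expressed in the same unit (e.g. tons CO2e).
    """
    if annual_budget_per_person <= 0:
        raise ValueError("annual_budget_per_person must be positive")
    return total_impact / annual_budget_per_person


# Figures from the article.
TOTAL_CLIMATE_IMPACT_T = 9700.0   # tons CO2e for training GPT-4
PEOPLE_CLIMATE = 11_522           # annual climate budgets used, as stated above

# Assumption: per-person budget implied by the two figures above,
# back-calculated for illustration only.
implied_budget_t = TOTAL_CLIMATE_IMPACT_T / PEOPLE_CLIMATE
print(f"Implied climate budget: {implied_budget_t:.2f} t CO2e per person and year")

# Applying the conversion with that implied budget recovers the stated figure.
print(round(people_equivalents(TOTAL_CLIMATE_IMPACT_T, implied_budget_t)))
```

The same function applies to the other impact categories once the total impact and the per-person budget are given in matching units.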

Conclusion

Our research demonstrates that carbon-only accounting fundamentally misrepresents AI's true environmental impact, creating dangerous blind spots in sustainability discussions. Another important aspect is that AI development creates environmental winners and losers along geographic lines. The populations benefitting from (or at least having access to) AI services – primarily in wealthy Western countries – experience mainly the diffuse, global impacts of carbon emissions and climate change from AI’s energy consumption. Meanwhile, communities near mining operations, semiconductor manufacturing facilities, and processing plants receive minimal benefits from AI services but bear, on top of (more severe) climate change impacts, the concentrated burden of toxic pollution with poorly understood impact pathways.

As AI becomes increasingly integrated into our economy and society, we cannot afford to optimize for only one environmental indicator while ignoring all others. True sustainability requires confronting the complete environmental picture, even when it reveals uncomfortable truths about geographic inequality and toxic impacts.

Building more sustainable AI will require fundamental shifts in how we measure, communicate, and manage its environmental impacts. This transformation demands greater transparency from both industry and developers – a challenge that extends beyond superficial corporate responsibility statements. For now, comprehensive environmental assessment requires intensive investigations, reverse engineering, and getting your hands dirty. This needs to change.

To steer AI development in a different direction, our hard-won data points must be translated into policy frameworks that can guide the industry toward a more sustainable AI future.

Bibliography

Falk, S., Ekchajzer, D., Pirson, T., Lees-Perasso, E., Wattiez, A., Biber-Freudenberger, L., Luccioni, S., & van Wynsberghe, A. (2025). More than carbon: Cradle-to-grave environmental impacts of GenAI training on the Nvidia A100 GPU. arXiv Preprint. https://arxiv.org/abs/2509.00093

Grattafiori, A., et al. (2024). The Llama 3 herd of models. arXiv Preprint. https://arxiv.org/abs/2407.21783

Karydopoulos, P., & Pavlidis, V. F. (2025). Impact of prolonged thermal stress on Intel i7-6600U graphics performance. In 2025 IEEE International Conference on Consumer Electronics (ICCE) (pp. 1–6). IEEE.

Ostrouchov, G., Maxwell, D., Ashraf, R. A., Engelmann, C., Shankar, M., & Rogers, J. H. (2020). GPU lifetimes on Titan supercomputer: Survival analysis and reliability. In SC20: International Conference for High Performance Computing, Networking, Storage and Analysis (pp. 1–14). https://doi.org/10.1109/SC41405.2020.00045

About the Author

Sophia Falk

Sustainable AI Lab, University of Bonn